BELKA unleashed

We're releasing over 4B physical measurements to the community on Polaris

Today, we are releasing more protein-small molecule interaction data than exists in the rest of the public domain combined. Let’s put that into some context.

A brief history of cataloging biomedicine

In 1836, Thomas Lawson, the United States Army Surgeon General, started the National Library of Medicine (NLM). As reported by historian Wyndham D. Miles, “The entire collection could have been held by a four-shelf bookcase, shoulder high and 7 or 8 feet wide.” Within half a century, the collection had grown to about 50,000 books and 60,000 pamphlets (great little history of NLM in ref 1).

Some 150 years later, the NLM formed the National Center for Biotechnology Information (NCBI; great little history of NCBI in ref 2), spearheaded by Allan Maxam and others. There was a growing appreciation of the importance of molecular genetics in human health and also a growing appreciation of the scale of that data. To make accessing such data easier, NCBI was founded to

design, develop, implement, and manage automated systems for the collection, storage, retrieval, analysis, and dissemination of knowledge concerning human molecular biology, biochemistry, and genetics;

and

perform research into advanced methods of computer-based information processing capable of representing and analyzing the vast number of biologically important molecules and compounds.

The full text of the 1988 bill from the US Congress that created NCBI is in ref 3. It’s striking how prescient it was about the measurement technologies coming up: the Human Genome Project would start two years later and take until 2003 to complete (genome nerds know that’s not quite right).

A brief history of measuring parts of biomedicine at scale

In parallel, technologies were emerging to actually measure this sort of data at scale. DNA microarrays were invented in the early 1990s (ref 4, ref 5), enabling the measurement of expression levels for thousands of genes in a single experiment. As many labs began performing such experiments, the community scrambled for ways to leverage the ever-increasing amount of data rolling in. This led to the creation of the NCBI Gene Expression Omnibus (NCBI GEO) in 2001 (ref 6), which as of this writing holds about 7.5M samples from high-dimensional datasets (stats in ref 7). With the advent of high-throughput DNA sequencing, the number of studies really took off, including the NIH ENCODE project (ref 8) and the Roadmap Epigenomics project (ref 9), whose datasets have been put to use by others with smart analysis approaches (ref 10). Single-cell methods have only increased this pace of data generation.

There is a lot of nucleic acid-based data of living systems out there now.

Noting this explosion of omics data, other groups started to industrialize the production of phenotypic data. Anne Carpenter’s group at the Broad Institute and others began systematically imaging cells in culture that had received a variety of perturbations, publishing a large collection of these images in 2013 (ref 11).

A brief history of enabling machine learning methods on biomedicine

NCBI GEO and other collections like it are fantastic for the preservation of omics data but if one wants to leverage such data for machine learning approaches there’s still more work to be done. To facilitate such methods, groups like Kaggle (founded in 2010, ref 12) started hosting datasets and providing the infrastructure to enable ML competitions on those datasets. More platforms have sprung up with the explicit purpose of hosting ML-ready datasets for a variety of problems. Valence Labs started Polaris to host benchmarks for drug discovery, for example.

Recursion hosted a Kaggle competition with its own images in 2019 (ref 13) and has had multiple large-scale data releases in the time since (ref 14). Other groups in the life sciences started going the Kaggle route, releasing their own collections of data in hopes of engaging the community (ref 15, ref 16).

To say that we at Leash were inspired by Recursion’s Kaggle competition is an understatement. Andrew Blevins and I were both at Recursion while Berton Earnshaw and Ben Mabey spearheaded the Kaggle effort, and they were strongly encouraged by the culture of open science seeded by Chris Gibson, Blake Borgeson, and Dean Li when they started the company. Those datasets helped the biological computer vision community enormously, and given how tricky data generation at scale can be, we saw the value in opening up big, well-controlled datasets to enable the community to develop more robust methods.

It’s time to apply scale to chemistry data (ref 17, ref 18)

When we started Leash, we naively assumed that because NCBI GEO was built to handle nucleic acid datasets and is overflowing with them, we’d have no trouble finding DNA-encoded chemical library datasets to build infrastructure and train models on. How wrong we were! There weren’t any that we could find. We complained about it on this very blog (ref 19). We were so mad, we vowed to release some the moment we could.

That led us to BELKA, a Kaggle competition where we released ~100M molecules tested against 3 protein targets for binding, and challenged the machine learning community to use that data to predict small molecule binding in other corners of chemical space (ref 20). Turns out it gets harder the farther away from the training set you get (ref 21).

It’s also hard to construct a Kaggle competition. If you want to do your molecule splits correctly - such that your models don’t cheat and memorize building blocks, for example - you have to be very careful to make sure there are enough examples to train on and enough to test on. It’s tricky to come up with a way to score which competitors are doing better; we actually had to change the metric halfway through the competition (ref 22). We chose to make the problem one of classification - “does this molecule bind or not”, instead of predicting some continuous binding score - and that choice irritated some folks who didn’t like that we hid how we chose that bind-or-not threshold (ref 23, totally fair criticism). Kaggle also insists that the winners reveal their solutions/models/additional datasets publicly, and if you’re a private company, you probably don’t want to do that.
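To make the building-block concern concrete, here is a minimal sketch of one way to split a combinatorial library so that no building block appears on both sides of the split. It is an illustration only, not a description of how the BELKA splits were actually made: the `Molecule` type, the `building_block_split` function, the 30% hold-out fraction, and the toy library are all hypothetical, and the sketch assumes each molecule is fully described by its building-block IDs.

```python
import itertools
import random
from typing import List, NamedTuple, Set, Tuple


class Molecule(NamedTuple):
    """Hypothetical record: a molecule identified by its building blocks."""
    mol_id: int
    blocks: Tuple[str, ...]


def building_block_split(
    molecules: List[Molecule], holdout_fraction: float = 0.2, seed: int = 0
) -> Tuple[List[Molecule], List[Molecule], List[Molecule], Set[str]]:
    """Split so that train and test share no building blocks.

    Molecules mixing held-out and non-held-out blocks are discarded,
    which is where the "enough examples on each side" problem comes from.
    """
    all_blocks = sorted({b for m in molecules for b in m.blocks})
    rng = random.Random(seed)
    n_holdout = max(1, int(len(all_blocks) * holdout_fraction))
    held_out = set(rng.sample(all_blocks, n_holdout))

    train, test, discarded = [], [], []
    for m in molecules:
        n_held = sum(b in held_out for b in m.blocks)
        if n_held == 0:
            train.append(m)          # built only from "seen" blocks
        elif n_held == len(m.blocks):
            test.append(m)           # built only from held-out blocks
        else:
            discarded.append(m)      # mixed: would leak blocks across the split
    return train, test, discarded, held_out


# Toy library: 10 blocks, every ordered combination of 3 (1,000 molecules).
toy_blocks = [f"bb{i}" for i in range(10)]
toy_library = [
    Molecule(i, combo)
    for i, combo in enumerate(itertools.product(toy_blocks, repeat=3))
]
train, test, discarded, held_out = building_block_split(
    toy_library, holdout_fraction=0.3
)
print(len(train), len(test), len(discarded))
```

In this toy case, holding out 3 of 10 blocks leaves 343 molecules for training and only 27 for testing, with 630 discarded. That is the tension in a nutshell: the cleaner the split, the less data survives on either side.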

To truly make improvements on things like how to draw that threshold, or what background to use, the raw data simply have to be available for folks to try different methods. To make public comparisons on machine learning methods used by any group, public or private, the Kaggle contest collection has to be available without restrictions. We also noticed that a lot of ligand prediction work uses pose as a benchmark - “given that this molecule binds, where does it bind?” - and we think that’s the wrong task. We believe the right task is “out of this large collection of molecules, rank them on their likelihood of binding”, and there simply aren’t good widely-accepted benchmarks for this problem.
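For readers who want to see what scoring a ranking looks like, here is a small sketch of two common ways to do it: average precision and enrichment in the top k. These are generic metrics offered as an assumption about what a ranking benchmark could use, not a statement of how any BELKA benchmark is scored; the function names, scores, and labels are made up for illustration.

```python
from typing import Sequence


def ranked_indices(scores: Sequence[float]) -> list:
    """Indices of molecules sorted from highest to lowest predicted score."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


def average_precision(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Mean of the precision values at each rank where a true binder appears."""
    hits = 0
    precision_sum = 0.0
    for rank, i in enumerate(ranked_indices(scores), start=1):
        if labels[i]:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0


def enrichment_at_k(scores: Sequence[float], labels: Sequence[int], k: int) -> float:
    """Hit rate in the top k divided by the hit rate of the whole collection."""
    top = ranked_indices(scores)[:k]
    top_rate = sum(labels[i] for i in top) / k
    base_rate = sum(labels) / len(labels)
    return top_rate / base_rate if base_rate else 0.0


# Made-up example: 8 molecules, 2 true binders (label 1).
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2]
labels = [1, 0, 1, 0, 0, 0, 0, 0]
print(average_precision(scores, labels))   # (1/1 + 2/3) / 2
print(enrichment_at_k(scores, labels, 2))  # (1/2) / (2/8)
```

Both metrics reward putting true binders near the top of a large list, which is the practical question when you can only afford to follow up on a small fraction of a library.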

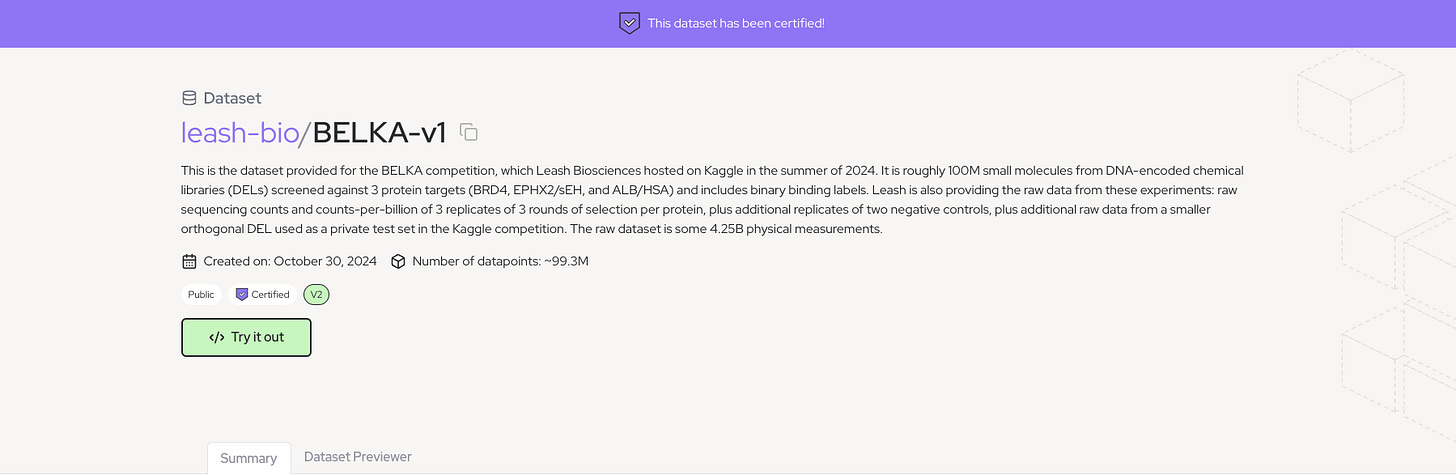

So we decided to release BELKA on Polaris (see here), a platform designed for such datasets, for folks to improve their methods and use it as a benchmark to compare those methods. We’re releasing all of BELKA: every molecule, every replicate, every sequencing read count, all the background controls we used, all the building blocks, everything. Please use it! You can read more about the dataset details on the Polaris README (ref 24).

BELKA is big. In raw form it’s even bigger

The raw data composing BELKA is ~4.25B physical measurements, mostly from 133M molecules across 3 protein targets, 3 rounds of selection for each, and each of those was done in triplicate, plus replicates of multiple background controls, plus even more screens against a smaller DEL (536k molecules) that we used as a particularly challenging test set in the Kaggle competition.

BELKA is bigger than anything else out there. It’s bigger than PubChem bioactivities (295,468,175, ref 25). It’s bigger than DrugBank at 23k (ref 26) or ChEMBL at 22M (ref 27). It’s more than 1000x bigger than BindingDB at 2.9M (ref 28). As we’ve said (ref 29), it was collected at the same time with the same reagents in the same facility with the same single pair of hands (hands attached to Brayden Halverson), to minimize batch effects. We hope the community finds it useful.
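As a quick sanity check on those comparisons, the ratios can be worked out directly from the figures quoted in this post. The counts below are the ones cited above; the variable names are just for illustration, and since these databases count different kinds of records, the ratios are best read as rough orders of magnitude.

```python
# Figures as quoted in this post (BELKA raw data is approximate: ~4.25B).
belka_measurements = 4.25e9

other_collections = {
    "PubChem bioactivities": 295_468_175,
    "ChEMBL": 22e6,
    "BindingDB": 2.9e6,
    "DrugBank": 23e3,
}

# How many times larger BELKA's raw measurement count is than each collection.
ratios = {
    name: belka_measurements / count
    for name, count in other_collections.items()
}

for name, ratio in ratios.items():
    print(f"{name}: ~{ratio:,.0f}x")
```

By these numbers, BELKA's raw measurement count is roughly 14x PubChem bioactivities and well over 1000x BindingDB.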

We collected the BELKA data in the summer of 2023 in Leash’s original lab, the one we built in the basement of my house (ref 30). Mostly in the time since, other groups have released DNA-encoded chemical library datasets (ref 31, ref 32, ref 33). Notably, insitro released a big one about two weeks ago (ref 34). We love that more and more groups are open-sourcing datasets and methods to help all of us get a deeper understanding of biology and to smooth the path to useful therapeutic material.

At Leash, we are proud to be part of this journey of the dissemination and use of life science information to ease human suffering, and we’re grateful to be doing it at a time when scalable technologies have the potential to make a massive impact. Please use our data and methods to help get treatments and technologies to those in need!